Container Orchestration: Running dotCMS in Kubernetes

Organizations are always looking for ways to make their technology infrastructure more flexible and scalable, and dotCMS is no different. That is why we have invested significant developer time and resources into scaling dotCMS with container technologies like Docker and Kubernetes, giving customers the opportunity to build with a platform that delivers at higher speeds while consuming fewer resources.

In our upcoming dotCMS 5 Series, dotCMS will start releasing Docker images, like our current .zip and .tar.gz release files, as part of each subsequent dotCMS release. Internally, we have been running and testing dotCMS in Docker for several months now and are in the process of moving a few of our own dotCMS properties to Docker-ized installations.

At the same time, we understand that creating Docker containers is only part of the story. We can’t forget about the orchestration of those containers for manageable and scalable dotCMS environments. This post is intended to show how our reference dotCMS Docker configuration can be ported into an orchestration environment other than Docker Swarm. It will test our assumptions about how easy it would be for clients to take our reference implementation and port it to the container orchestrator they are using in their infrastructure.

While there are many orchestration choices available, we chose Kubernetes for this post because of its popularity and applicability to multiple cloud providers as well as on-premises hosting.

How dotCMS Works With Kubernetes

Our current plans are to release a ‘Docker-Compose.yml’ file along with our Docker images as a reference implementation that shows how each container needs to be configured and how the containers depend on each other. We believe that the Docker-Compose file succinctly captures all of the relevant information needed for configuring the containers to work in any orchestration environment. Of course, the Docker-Compose file can be used to run directly in Docker Swarm and Docker Compose. It has been our assumption from the beginning that it would be a trivial matter for somebody to take this reference implementation and create a deployment for the orchestrator that they use in their organization, but is this true for Kubernetes?

Before working through an example, here are the six different containers dotCMS will be providing with each release:

- HAProxy - While not needed for most environments, since most will already have a load balancer, it will be provided as part of our reference implementation for a couple of reasons. First, including it allows us to provide containers for the entire stack that can be run with minimal environmental dependencies. This makes it quick to reproduce environments for testing and debugging multiple instances of dotCMS configured in a cluster. Second, it provides a reference for the configuration settings needed to match the load balancer settings used in our internal testing.

- dotCMS - The content management system you have come to know and love - the star of this show!

- ElasticSearch - The engine behind much of the awesome search capability in dotCMS. Having ElasticSearch in its own container will allow us to scale it independently of the other parts of the system as well as offload the CPU and memory needed to run it.

- Hazelcast - The default cache provider used by dotCMS to make it extremely scalable. Having a separate caching container allows caching to be scaled independently of the other layers.

- Hazelcast Management Center - As the name suggests, a management center that provides insight into the internal details of the caches and the nodes hosting those caches.

- PostgreSQL - Very popular and capable open source database engine. While most production environments will have their own database servers available, this container is provided for much the same reasons given for HAProxy above. We wanted the full stack containerized for demonstration, testing, and debugging purposes.

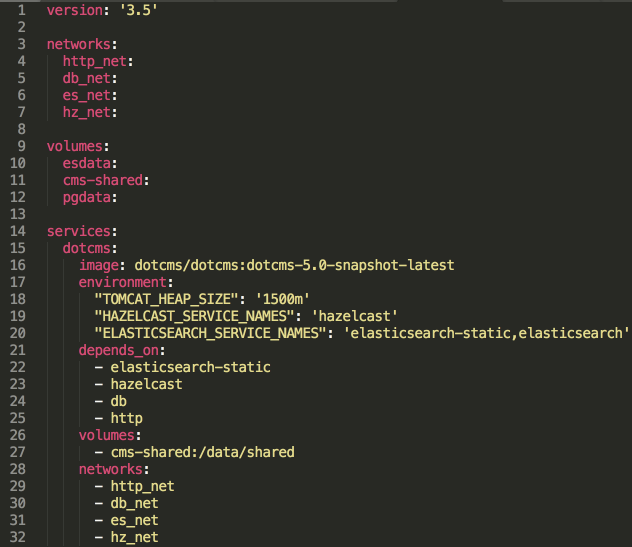

While we did port the entire reference implementation to Kubernetes, for the purpose of our discussion and examples here, we will just focus on the configuration of the dotCMS container. Here is an excerpt of the Docker-Compose file with all of the other service definitions removed except for dotCMS:

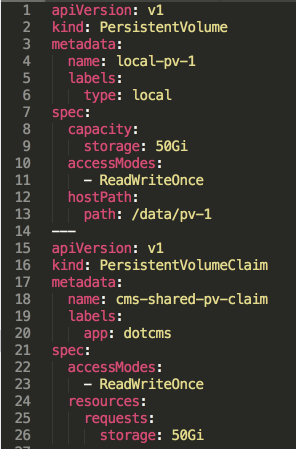

If you are familiar with Kubernetes, you know that the way it handles volumes is a little more involved than what you see in this Docker-Compose file: persistent volumes have to be declared and provisioned explicitly. In a production scenario, it is likely that “/data” would be an NFS mount. We then also need to declare a PersistentVolumeClaim, as seen below:

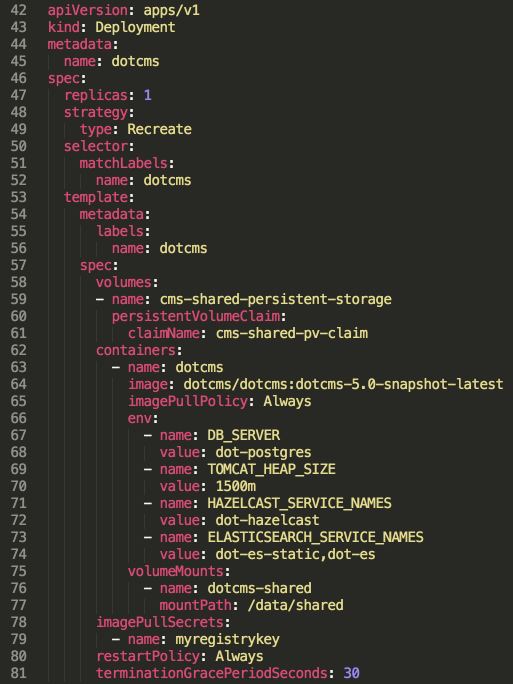
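
For readers who want the volume setup in text form, here is a minimal sketch of a PersistentVolume and a matching PersistentVolumeClaim. It is not the dotCMS reference configuration: the object names, the 10Gi size, the `manual` storage class, and the use of a `hostPath` volume at “/data” are all assumptions made for illustration.

```yaml
# Illustrative sketch only; names, sizes, and storage class are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: dotcms-shared-pv          # hypothetical name
spec:
  capacity:
    storage: 10Gi                 # hypothetical size
  accessModes:
    - ReadWriteOnce
  storageClassName: manual        # hypothetical class, used to pair PV and PVC
  hostPath:
    path: /data                   # single-node testing; production would more
                                  # likely use an `nfs:` block (server + path)
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dotcms-shared-pvc         # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: manual        # must match the PersistentVolume above
  resources:
    requests:
      storage: 10Gi               # must not exceed the volume's capacity
```

With an NFS-backed volume, the access mode would typically be `ReadWriteMany` so that several dotCMS pods can share the same data.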

Of course, that PersistentVolumeClaim has to be declared as a volume in our Deployment and then mounted into our container. These differences around volumes are really the only substantive differences in the definition of our Kubernetes deployment:
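
As a rough text sketch of how a claim is declared as a volume and mounted into the container, consider the Deployment below. It is not the dotCMS reference file: the image name, port, environment variable, mount path, and claim name are hypothetical placeholders.

```yaml
# Illustrative sketch only; image, port, env var, and paths are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dotcms
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dotcms
  template:
    metadata:
      labels:
        app: dotcms
    spec:
      containers:
        - name: dotcms
          image: example/dotcms:5.0        # hypothetical image reference
          ports:
            - containerPort: 8080          # assumed HTTP port
          env:
            - name: CACHE_HOST             # hypothetical variable name
              value: dot-hazelcast         # name of the cache Service
          volumeMounts:
            - name: shared-data
              mountPath: /data/shared      # hypothetical path in the container
      volumes:
        - name: shared-data
          persistentVolumeClaim:
            claimName: dotcms-shared-pvc   # must name an existing claim
```

The `volumes` entry ties the pod to the claim, and the `volumeMounts` entry with the same `name` places it inside the container. That pairing is the extra step a Docker-Compose file does not need.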

You may have noticed that the names of our other services have changed in the Kubernetes deployment file. This is because Kubernetes automatically adds environment variables to containers when it starts them up, and these variables are based on the service names. For example, in the Hazelcast container, Kubernetes automatically sets an environment variable named “HAZELCAST_PORT”. This would be fine, except that we use that variable name internally for dynamic configuration. To avoid this collision, we added a “dot-” prefix to the service name. We also had to abbreviate the ElasticSearch service names because of a fifteen-character limitation.

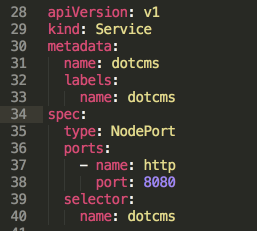
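
To make the naming collision concrete, the sketch below defines a Service for the cache layer using the “dot-” prefix. Kubernetes derives the injected variable names from the Service name by upper-casing it and replacing dashes with underscores. The selector label is hypothetical, and port 5701 is assumed because it is Hazelcast's default member port.

```yaml
# Illustrative sketch only; the selector label is hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: dot-hazelcast        # "dot-" prefix avoids the HAZELCAST_PORT collision
spec:
  selector:
    app: hazelcast           # hypothetical pod label
  ports:
    - name: hazelcast
      port: 5701             # assumed: Hazelcast's default member port
      targetPort: 5701
# Pods started after this Service exists receive variables such as:
#   DOT_HAZELCAST_SERVICE_HOST=<cluster IP>
#   DOT_HAZELCAST_SERVICE_PORT=5701
#   DOT_HAZELCAST_PORT=tcp://<cluster IP>:5701
# A Service named "hazelcast" would instead inject HAZELCAST_PORT with a
# tcp:// URL value, overriding any setting the container expects under that name.
```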

Those of you experienced with Kubernetes also know that this deployment is not exposed or available to be called by other deployments or from outside of the Kubernetes cluster. To make it available, we need to define a service, like this:
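
A minimal sketch of such a Service follows. The Service name, selector label, and port 8080 are assumptions for illustration and are not taken from the dotCMS reference files.

```yaml
# Illustrative sketch only; name, label, and port are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: dotcms
spec:
  # No "type" is given, so this is a ClusterIP Service:
  # reachable from inside the cluster only.
  selector:
    app: dotcms              # must match the labels on the dotCMS pods
  ports:
    - name: http
      port: 8080             # port other workloads connect to
      targetPort: 8080       # assumed port the dotCMS container listens on
```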

With this service definition, dotCMS is available to other workloads in the Kubernetes cluster, but how can it be accessed from outside the cluster? The answer is the HAProxy deployment and service. We will not cover the details of that container here, but know that HAProxy is exposed outside of the Kubernetes cluster and proxies requests to our dotCMS service within the cluster.

There you have it! We’ve taken our reference implementation from a Docker-Compose file and ported it over to Kubernetes. While Kubernetes does not provide native dependency management, one of the reasons for our customized third-party containers is that we have added some custom discovery logic that ensures their dependencies are in place before the main process in the container starts up.

Experienced with Kubernetes? Running dotCMS Will Be A Piece Of Cake

Circling back to our original question: Is it easy to port our Docker-Compose implementation to another orchestrator like Kubernetes? If you are already familiar with Kubernetes details like PersistentVolumes, PersistentVolumeClaims, Services, and the Kubernetes API used to define Deployments, it really is simple to port our reference implementation to Kubernetes.

If I, as a Kubernetes newbie, can make it work, I am confident that more experienced Kubernetes engineers will have no problem at all taking our reference implementation and porting it to Kubernetes and their specific environment.

It is also worth mentioning that I used Kompose to translate our Docker-Compose file. While Kompose did generate some noise, it also generated Kubernetes deployment files that were very similar to the working files I manually created.

Disclaimer: Some details discussed above are subject to change before the first release of our Docker images and implementation.
