Virtual Machines vs Containers vs Serverless Computing: Everything You Need to Know

Containers, virtual machines, and serverless computing (three terms you’d expect to hear in a sci-fi movie) are all part of an enterprise computing debate that asks: what’s the best way to develop, deploy, and manage applications in an era laden with new ideas, devices, and digital experiences?

If you’re navigating this landscape with questions, we’ve got some answers below.

What are Virtual Machines?

A virtual machine, also known as a VM, emulates a physical computer: it can execute programs and applications without having direct access to any actual hardware.

Microsoft Azure defines a VM as a “computer file, typically called an image, that behaves like an actual computer. In other words, a computer is created within a computer.”

How Do Virtual Machines Work?

VMs run on top of a hypervisor, which in turn sits either on a host operating system or directly on “bare-metal” hardware. A hypervisor, also known as a virtual machine monitor (VMM), can be a piece of software, firmware, or hardware that enables you to create and run VMs.

Each VM runs its own guest operating system, so a group of VMs can sit alongside each other on the same host, each with a different operating system. For example, you can have a Linux VM sitting next to a UNIX VM. Each VM also carries its own virtualized hardware stack (virtual CPUs, memory, network adapters, and storage) along with its own applications, binaries, and libraries.

VMs provide a significant benefit: they let you consolidate many applications onto a single server, putting the days of running one application per server in the past. However, they also have drawbacks.

Because each VM runs its own operating system, overhead grows quickly as projects become large. Every additional VM adds a full OS to the RAM and storage footprint, and that extra weight causes issues throughout the software development pipeline.

The solution? Containers.

What is a Container?

While virtual machines virtualize a machine, a container virtualizes the operating system so that multiple workloads can run on a single OS instance. With VMs, the hardware is virtualized to run multiple OS instances, each of which consumes its own memory, storage, and boot time, slowing things down and driving up the total cost of ownership. To avoid all of that, containers share one OS, increasing deployment speed and portability while lowering costs.
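To make the overhead argument concrete, here is a back-of-the-envelope sketch in Python. The memory figures are hypothetical placeholders, not measurements; the point is the shape of the arithmetic: with VMs, the guest OS cost is paid once per application, while containers pay for the shared OS only once.

```python
# Hypothetical per-component memory costs in MB; real figures vary widely.
HOST_OS_MB = 1024   # the single host OS (plus hypervisor or container runtime)
GUEST_OS_MB = 1024  # a full guest OS inside each VM
APP_MB = 256        # one application with its binaries and libraries


def vm_footprint(n_apps: int) -> int:
    """One VM per app: every VM boots its own guest OS."""
    return HOST_OS_MB + n_apps * (GUEST_OS_MB + APP_MB)


def container_footprint(n_apps: int) -> int:
    """One container per app: all containers share the host OS kernel."""
    return HOST_OS_MB + n_apps * APP_MB


for n in (1, 10, 50):
    print(f"{n:>2} apps: VMs {vm_footprint(n):>6} MB vs containers {container_footprint(n):>6} MB")
```

Under these assumptions the gap is exactly `n_apps * GUEST_OS_MB`, which is why the overhead becomes noticeable as projects grow.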

Like virtual machines, containers provide an environment for microservices to be deployed, managed, and scaled independently — but in a more streamlined fashion as mentioned above.

A container, typically built on Linux, isolates a service and its dependencies into a self-contained unit that you can run in any environment with a compatible container runtime. The main purpose of containers is to make efficient use of the allocated server space and resources, so that isolated processes run more efficiently and quickly, while giving developers the ability to scale individual containers up or down very easily.

How Do Containers Work?

Unlike VMs, which provide hardware virtualization, containers provide operating-system-level virtualization. Containers resemble VMs in that each has its own private space for processing, executing commands, and mounting file systems, as well as its own private network interface and IP address. The significant difference is that containers share the host’s operating system kernel with other containers, which makes them much more lightweight.

Each container comes packaged with its own user space, which allows multiple containers to run on a single host. And since the OS is shared across all the containers, the only components that have to be packaged with each one are its binaries and libraries, which can easily be supplied by a Docker image (a read-only template that bundles an application together with its dependencies).

These containers sit on top of the Docker Engine, which in turn sits on top of the host operating system. The Docker Engine relies on Linux kernel features, such as namespaces and control groups (cgroups), to let developers easily create isolated containers on top of that operating system.

What Is Serverless Computing?

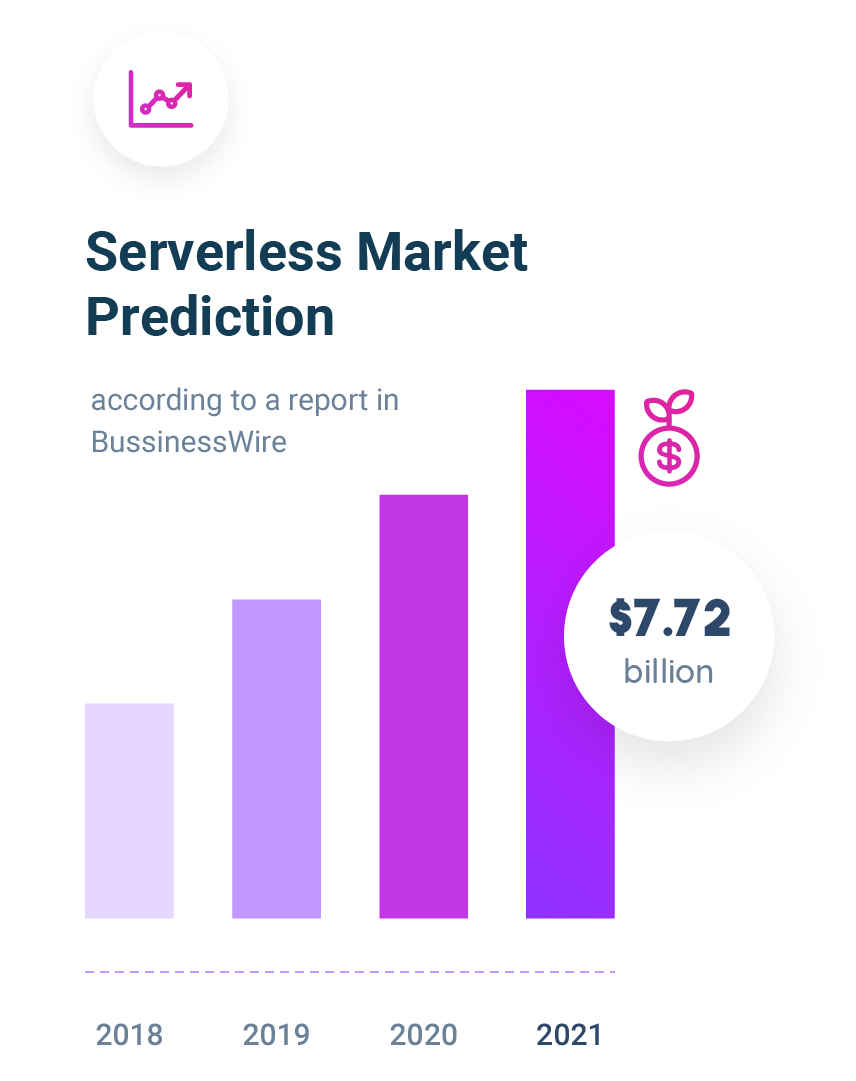

According to a report in BusinessWire, the serverless market is predicted to be worth $7.72 billion by 2021.

A serverless architecture allows you to write code (think C#, Java, Node.js, Python, and the like), set a few simple configuration parameters, and then upload the “package” to cloud-based servers that are owned and managed by a third party. You might be familiar with AWS Lambda, one of the most popular serverless platforms.
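As a minimal sketch of what that uploaded code can look like, here is a Python function written in the `handler(event, context)` shape that AWS Lambda uses for Python. The `name` field in the event and the greeting it returns are illustrative assumptions, and the configuration and upload steps are not shown.

```python
import json


def lambda_handler(event, context):
    """Entry point in the (event, context) shape Lambda uses for Python.

    `event` carries the invocation payload as a dict; `context` carries runtime
    metadata supplied by the platform (unused here). The "name" field is a
    hypothetical input chosen for this example.
    """
    name = event.get("name", "world")
    # This statusCode/body shape suits an API Gateway proxy integration;
    # other triggers can return any JSON-serializable value.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: call the handler directly with a sample event and no context.
    print(lambda_handler({"name": "dotCMS"}, None))
```

Because the handler is a plain function, you can exercise it locally like this before uploading it.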

Despite its name, serverless doesn’t mean that there are no servers involved. Instead, the term describes the way organizations essentially outsource their servers rather than owning and maintaining their own, leveraging external, cloud-based servers that are run and maintained by a provider such as Amazon Web Services (AWS).

AWS Lambda refers to the “package” mentioned earlier as a function. Serverless architecture has introduced concepts like FaaS (Function-as-a-Service) and BaaS (Backend-as-a-Service). BaaS is typically used by “rich client” applications, such as single-page web apps or mobile apps, that rely on a vast ecosystem of cloud-based databases and authentication services like Auth0 and AWS Cognito.

This is why serverless computing is often equated with FaaS: the company in question simply requests functionality from an external server, leaving it “serverless”, but not functionless.

How Does Serverless Computing Work?

Lambda is an example of FaaS. With Lambda, a function runs when it is triggered, either by an event from an AWS service such as S3 (for example, a file upload) or by a call from an application, such as one running on EC2. When the function is invoked, Lambda runs it inside a container that executes until the function has finished. The point to keep in mind is that Lambda takes care of provisioning, deployment, and management; all you have to do is upload the code.
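The division of labor described above can be illustrated with a toy, in-process model. This is a hypothetical sketch, not how Lambda is implemented (real Lambda, for instance, may reuse an execution environment across invocations); the `ToyFaaS` class and its methods are invented for illustration.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any], Dict[str, Any]], Any]


class ToyFaaS:
    """Toy model of the FaaS lifecycle: upload code, invoke it, tear down.

    You supply only the function; the "platform" supplies the environment.
    """

    def __init__(self) -> None:
        self._functions: Dict[str, Handler] = {}
        self.environments_created = 0

    def upload(self, name: str, handler: Handler) -> None:
        # "Deploying" is just registering code under a name; nothing to provision.
        self._functions[name] = handler

    def invoke(self, name: str, event: Dict[str, Any]) -> Any:
        handler = self._functions[name]        # KeyError if never uploaded
        environment = {"function_name": name}  # stand-in for a fresh container
        self.environments_created += 1
        try:
            return handler(event, environment)  # runs until the function returns
        finally:
            environment.clear()                 # "tear down" after execution


platform = ToyFaaS()
platform.upload("resize", lambda event, env: f"{env['function_name']}: {event['width']}px")
print(platform.invoke("resize", {"width": 640}))
```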

Serverless has often been measured against containers, since both technologies offer similar benefits in terms of rapid development. However, it is important to note that serverless computing and containerization are not mutually exclusive; they can work in tandem.

Containers and Serverless Computing: Friends, Not Foes

You could easily assume that serverless computing will eliminate the need for software developers to deal with containers directly. After all, all you have to do is write the code, upload it to Lambda, and sit back while AWS does the heavy lifting. In reality, though, a purely serverless project would be a tall order for any ambitious enterprise. While AWS Lambda can be an extremely valuable resource, it is far from an all-round replacement for deploying and managing containers directly with tools like Docker and Kubernetes.

Serverless computing comes with some stringent limitations. With Lambda, for example, you’re subject to predefined restrictions on deployment package size, memory usage, and the maximum time a function can run. Add the limited list of natively supported programming languages, and the practical constraints of running an entire system on a serverless architecture become clear.[1]
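One practical habit is to check a workload against the platform’s limits before committing to serverless. The sketch below assumes hypothetical `PlatformLimits` and `FunctionConfig` types; the numbers are illustrative placeholders, not AWS’s actual limits, which you should look up in the provider’s current documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformLimits:
    """Provider-imposed ceilings (values supplied by the caller)."""
    max_package_mb: float
    max_memory_mb: int
    max_timeout_s: int


@dataclass(frozen=True)
class FunctionConfig:
    package_mb: float
    memory_mb: int
    expected_runtime_s: int


def violations(cfg: FunctionConfig, limits: PlatformLimits) -> list:
    """Return human-readable reasons a workload will not fit the limits."""
    problems = []
    if cfg.package_mb > limits.max_package_mb:
        problems.append(f"package {cfg.package_mb} MB > {limits.max_package_mb} MB")
    if cfg.memory_mb > limits.max_memory_mb:
        problems.append(f"memory {cfg.memory_mb} MB > {limits.max_memory_mb} MB")
    if cfg.expected_runtime_s > limits.max_timeout_s:
        problems.append(f"runtime {cfg.expected_runtime_s} s > {limits.max_timeout_s} s")
    return problems


# Placeholder limits for illustration only; not any provider's real numbers.
limits = PlatformLimits(max_package_mb=50, max_memory_mb=3000, max_timeout_s=300)
batch_job = FunctionConfig(package_mb=120, memory_mb=2048, expected_runtime_s=1800)
print(violations(batch_job, limits))
```

A long-running, heavyweight job like `batch_job` is the kind of workload that fits a container better than a function.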

To run a serverless architecture successfully, you need to keep functions as small as possible so they don’t hog resources or overload the system. The containers used in a serverless environment are not defined or managed by you; they are controlled by the provider that executes your serverless functions. With those containers beyond your reach, you cannot monitor their performance, debug them directly, or control how quickly they scale.

With a Docker ecosystem, you are not restricted to a predefined package size, memory limit, or execution time. You can build an application as large and complex as you like, while keeping full control over both individual containers and the overall container system through your container management software.

In short, serverless architecture and containers work best not in competition but in tandem. A Docker-based approach is best for large-scale, complex applications, while a serverless architecture is best suited to smaller tasks that run in the background or are accessed via external services.[1]

If you’re running a container-based application, you can offload certain functions to a serverless platform to free up resources for the main application.
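A minimal sketch of that pattern, assuming a hypothetical `TaskRouter` inside the containerized application: most tasks run in-process, while a configured set is handed to a serverless platform through an injected `invoke_remote` callable. A fake invoker stands in for a real FaaS client so the example runs locally; the task names are invented.

```python
from typing import Any, Callable, Dict, List, Set

# Hypothetical remote invoker: in production this might wrap a FaaS provider's
# SDK call; here it is any callable taking (function_name, payload).
Invoker = Callable[[str, Dict[str, Any]], Any]


class TaskRouter:
    """Runs most work in-process but routes selected tasks to a serverless platform."""

    def __init__(self, local_tasks: Dict[str, Callable[[Dict[str, Any]], Any]],
                 offloaded: Set[str], invoke_remote: Invoker) -> None:
        self.local_tasks = local_tasks
        self.offloaded = offloaded
        self.invoke_remote = invoke_remote

    def run(self, task: str, payload: Dict[str, Any]) -> Any:
        if task in self.offloaded:
            return self.invoke_remote(task, payload)  # executes outside this container
        return self.local_tasks[task](payload)       # executes inside this container


# Fake invoker that records calls, standing in for a real FaaS client.
remote_calls: List[str] = []


def fake_invoke(name: str, payload: Dict[str, Any]) -> str:
    remote_calls.append(name)
    return f"queued {name}"


router = TaskRouter(
    local_tasks={"render_page": lambda p: f"<h1>{p['title']}</h1>"},
    offloaded={"generate_thumbnails"},
    invoke_remote=fake_invoke,
)
print(router.run("render_page", {"title": "Home"}))
print(router.run("generate_thumbnails", {"asset_id": 42}))
```

Injecting the invoker keeps the routing logic independent of any one provider’s SDK, which also makes it easy to test.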

How dotCMS Users Can Leverage Containers

dotCMS is made up of a set of fully containerized microservices. Plus, with the 2018 release of dotCMS 5.0, dotCMS now provides support for Docker, enabling dotCMS customers to easily jump on the containerization bandwagon. Along with the dotCMS Docker image, a Docker Compose file is also on the horizon for dotCMS users, which will help the Docker image work with the container management software of your choice.

In a recent dotCMS webinar, Brent Griffin, dotCMS’ Senior DevOps Engineer, demonstrated how dotCMS can be scaled using Docker. In the demonstration, Griffin broke dotCMS up into seven different components, highlighting how one of them, the Elasticsearch functionality, normally comes under heavy load from numerous content pull requests. The overall system then runs slowly, not because dotCMS itself is being taxed, but primarily because of the growing number of Elasticsearch queries backed up in a queue.

Griffin showed that, by containerizing the Elasticsearch capability, dotCMS users can compartmentalize that component, integrate it with the other components, and then scale it independently so it works more efficiently without overloading the overall system.
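The queueing problem described above can be sketched with simple arithmetic: if queries arrive faster than the search tier can serve them, the backlog grows linearly, and scaling that one component out fixes it. The load figures and the 70% target utilization below are hypothetical assumptions, and this simple fluid model ignores burstiness.

```python
import math


def replicas_needed(arrival_qps: float, per_replica_qps: float,
                    target_utilization: float = 0.7) -> int:
    """Smallest replica count that keeps each replica at or below the target utilization.

    Running below 100% leaves headroom for bursts; 0.7 is an assumed default.
    """
    if per_replica_qps <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("throughput must be positive and utilization in (0, 1]")
    return max(1, math.ceil(arrival_qps / (per_replica_qps * target_utilization)))


def backlog_after(seconds: float, arrival_qps: float, per_replica_qps: float,
                  replicas: int) -> float:
    """Queued queries after `seconds` when arrivals outpace total capacity."""
    return max(0.0, (arrival_qps - replicas * per_replica_qps) * seconds)


# Hypothetical load: 900 search queries/s; each search replica handles 250 queries/s.
print(backlog_after(60, 900, 250, replicas=1))  # a single search node falls behind
print(replicas_needed(900, 250))                # replicas to scale the search tier to
```

Because only the search component is scaled, the rest of the system keeps its original footprint.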

dotCMS Docker images have been designed to be “orchestrator agnostic”, so brands can use Kubernetes, Swarm, or any other orchestrator. Additionally, dotCMS performs complete internal testing against both Swarm- and Kubernetes-based orchestrators. dotCMS users can also externalize services, such as the Elasticsearch layer, making them individually scalable.

In short, dotCMS helps its customers leverage containerization to run websites and applications more efficiently, and at a lower cost than ever before — all while delivering a competitive customer experience.

The Future is Contained

Containers aren’t new, but they are shaping the future of the enterprise digital landscape. Because containers serve microservices so well, decrease costs, and speed up time to market, you can expect to see more brands leaving virtual machines behind in favor of Docker, Kubernetes, and AWS-powered containers.

Some will supplement those containers with serverless functionality where it makes sense, and others won’t. Either way, the future is contained, so it’s time to think about how you’re going to compartmentalize your digital presence for the benefit of your developers and customers.

---

References

[1] “Containers vs. Serverless Computing.” Rancher Labs, 9 Oct. 2017, https://rancher.com/containers-vs-serverless-computing/. Accessed 8 Aug. 2018.
